
An AI-Powered App Raises the Risk of Supply Chain Attacks by Exposing User Data


- Wondershare RepairIt is an AI-powered tool for enhancing images and videos. It may have inadvertently violated its own privacy policy by collecting and retaining private user images. Because of poor development, security, and operations (DevSecOps) practices, the application code contained overly permissive cloud access tokens.

- The cloud credentials hard-coded in the application granted both read and write access to sensitive cloud storage. In addition to customer data, the exposed cloud storage contained software binaries, container images, AI models, and company source code.

- Attackers could use the compromised access to tamper with executables or AI models and carry out sophisticated supply chain attacks. Such an attack could abuse vendor-signed software updates or AI model downloads to deliver malicious payloads to legitimate users.

Organizations in the AI era should take two critical security factors into account: the security of AI model deployment, and the consistency between the company's privacy policy and its actual data handling practices, especially for AI-powered applications. In one such case, Wondershare RepairIt, an AI photo editing application, unintentionally collected, stored, and leaked private user data because of inadequate development, security, and operations (DevSecOps) practices, in violation of the company's privacy policy, according to the security researchers who investigated it.

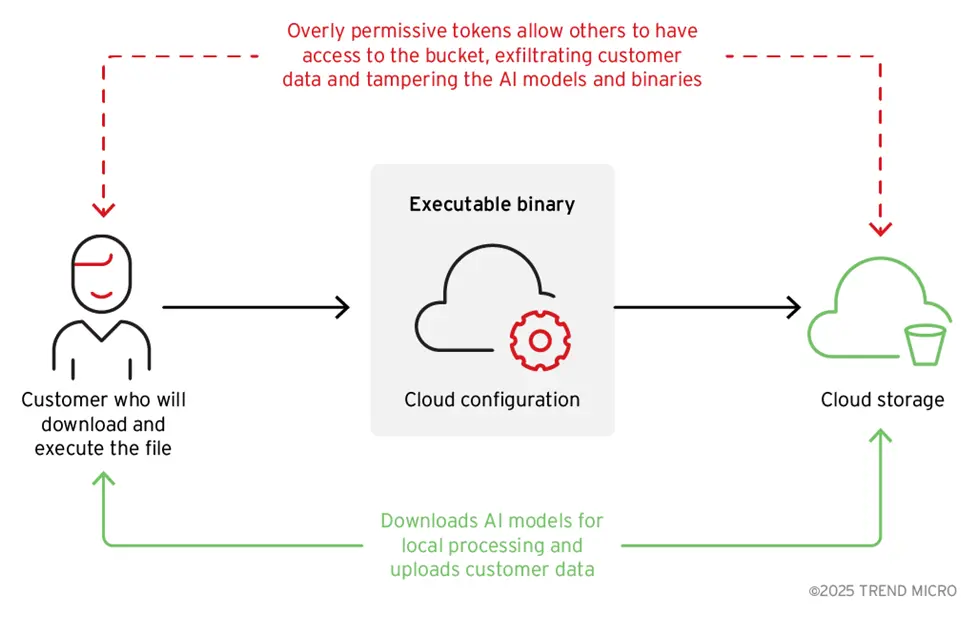

As shown in Figure 1, the application states that user data will not be stored. Its website says the same. However, because of security lapses, we observed that users' private photos were retained and subsequently exposed.

According to our investigation, the application's source code contained an overly permissive cloud access token as a result of poor DevSecOps practices. The sensitive data held in the cloud storage bucket was exposed through this token. In addition, because the data was not encrypted, anyone with basic technical skills could access it, download it, and use it against the company.

As we have seen in previous research, developers frequently ignore security guidance and embed overly permissive cloud credentials directly in code.

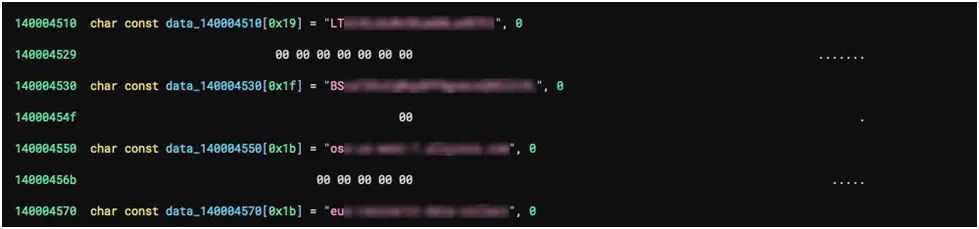

In our case, the executable binary contained credentials (Figure 2). Although this approach may seem practical, because it simplifies backend processing and the user experience, it exposes the company to serious risk if it is not implemented properly.

It is essential to secure the entire architecture. By clearly defining what the storage is for, setting up access controls, and making sure that credentials are granted only the permissions they need, companies can avoid catastrophic situations in which attackers download binaries, analyze them, and use the embedded credentials for purposes that have nothing to do with their intended function.

Hard-coding cloud storage access tokens with write permissions directly into binaries is a common practice, but in most applications the token's permissions are tightly restricted, which is why such tokens are typically used for logging or metrics collection. For example, data can be written to the cloud storage but cannot be read back.
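The write-only restriction described above can be checked mechanically before a token ships. The following is a minimal sketch, assuming the token's granted actions are available as a list of strings; the action names (`PutObject`, `GetObject`, `ListBucket`) and both function names are hypothetical and do not come from the application or from any specific cloud provider's API.

```python
from __future__ import annotations

from typing import Iterable

# Hypothetical action names, loosely modelled on object-storage APIs.
# A telemetry-style token should only be able to upload objects.
WRITE_ONLY_ACTIONS = frozenset({"PutObject"})


def excess_permissions(
    granted: Iterable[str],
    allowed: frozenset[str] = WRITE_ONLY_ACTIONS,
) -> set[str]:
    """Return every granted action that is outside the allowed set.

    A wildcard such as "*" is not in the allowed set, so it is reported too.
    """
    return set(granted) - allowed


def is_write_only(granted: Iterable[str]) -> bool:
    """True when the token grants nothing beyond the allowed write actions."""
    return not excess_permissions(granted)


if __name__ == "__main__":
    # A token that can also read and list would be flagged before release.
    print(sorted(excess_permissions(["PutObject", "GetObject", "ListBucket"])))
```

A check like this could run as a release gate, failing the build whenever an embedded token grants more than the upload permission it needs.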

Figure 2. The vulnerable implementation of cloud storage usage

The origin of the vulnerable code is unknown; it may have been written by an AI coding assistant or by a person. Either way, companies should be particularly cautious when using cloud services. For example, the leak of a single access token can enable threat actors to inject malicious code into distributed software, which can set off a supply chain attack. Leaks of this kind often have catastrophic results.

As of now, we have not heard back from the vendor despite our proactive attempts to reach it. The vendor was informed of these vulnerabilities in April. Shortly before publication, the vendor was also given access to the final draft of this blog.

The vulnerabilities were first reported through a well-known vulnerability disclosure program. They were published on September 17 and assigned the identifiers CVE-2025-10643 and CVE-2025-10644.

Binary analysis: Exposed credentials and data leakage

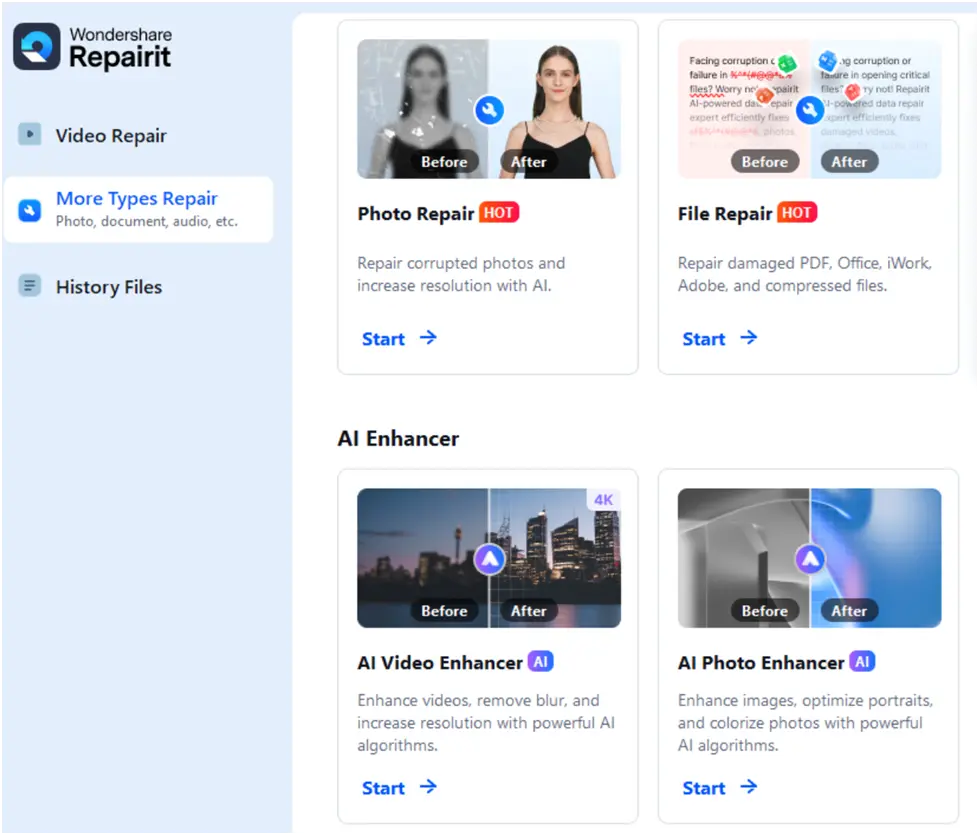

The discovery began when we downloaded the application binary, a client application widely advertised on the company's official website as a powerful, easy-to-use tool for repairing damaged images and videos using patented methods, with AI as its core engine (Figure 3).

Figure 3. Wondershare RepairIt highlights and advertisement

Binary analysis showed that the application uses a cloud storage account with hard-coded credentials. The storage account was not used only to download AI models and application data; we found that it also contained many signed executables developed by the company. It also held sensitive customer data (Figure 4). All of this was accessible because the cloud object storage identifiers (URLs and API endpoints), the secret access ID and key, and the bucket names were specified in the binary.

Figure 4. Binary analysis showing the cloud ID, secret, URL, and bucket name
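The kind of binary analysis described here often starts with pulling printable strings out of the executable and looking for anything that resembles a storage endpoint or a secret. The following is a minimal sketch of that first step, not the method used in this research; the two patterns and the function name `find_embedded_secrets` are illustrative assumptions, and real scanners rely on much larger, provider-specific rule sets.

```python
import re

# Runs of at least 6 printable ASCII bytes, similar to the Unix `strings` tool.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{6,}")

# Hypothetical, deliberately simple patterns for illustration only.
SUSPICIOUS = {
    "storage_url": re.compile(r"https?://[\w.-]+", re.IGNORECASE),
    "secret_assignment": re.compile(
        r"(secret|access[_-]?key|token)\w*\s*[=:]\s*\S{8,}", re.IGNORECASE
    ),
}


def find_embedded_secrets(blob: bytes) -> list[tuple[str, str]]:
    """Return (label, text) pairs for printable strings that look sensitive."""
    findings = []
    for match in PRINTABLE_RUN.finditer(blob):
        text = match.group().decode("ascii")
        for label, pattern in SUSPICIOUS.items():
            if pattern.search(text):
                findings.append((label, text))
    return findings


if __name__ == "__main__":
    # Synthetic bytes standing in for a compiled binary; the values are made up.
    sample = (
        b"\x00\x01access_key=ABCDEFGH12345678\x00\xff"
        b"https://bucket.example.invalid/models\x00"
    )
    for label, text in find_embedded_secrets(sample):
        print(label, text)
```

Running the same check against release builds is one way for a vendor to catch embedded credentials before an outside party does.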

Further investigation showed that the credentials hard-coded in the binary granted both read and write access to the bucket. The same cloud storage holds AI models, container images, binaries for other products from the same company, scripts, source code, and customer data such as pictures and videos.

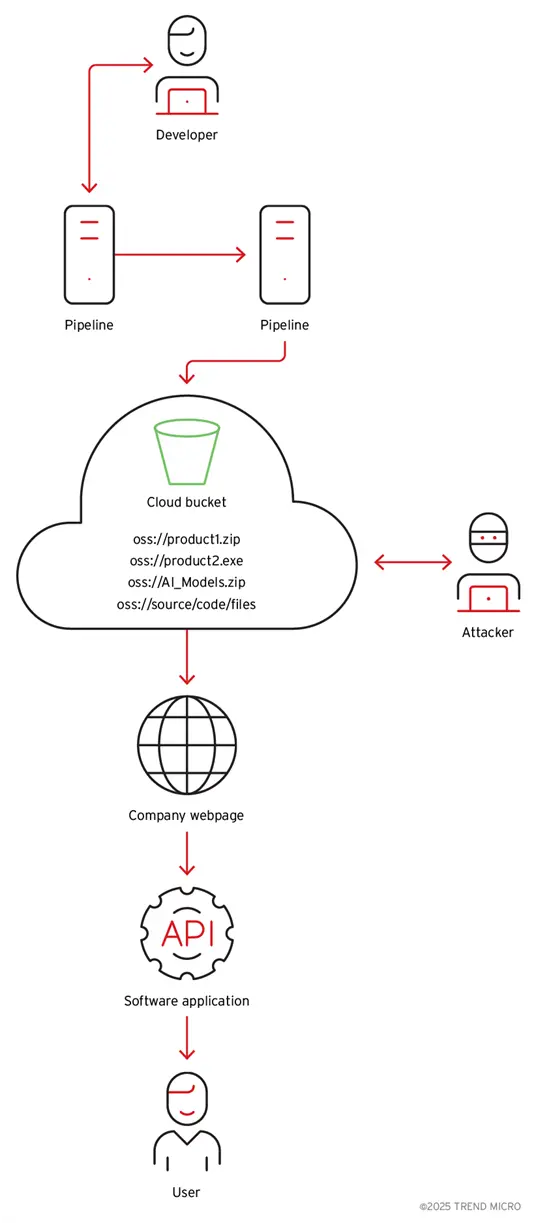

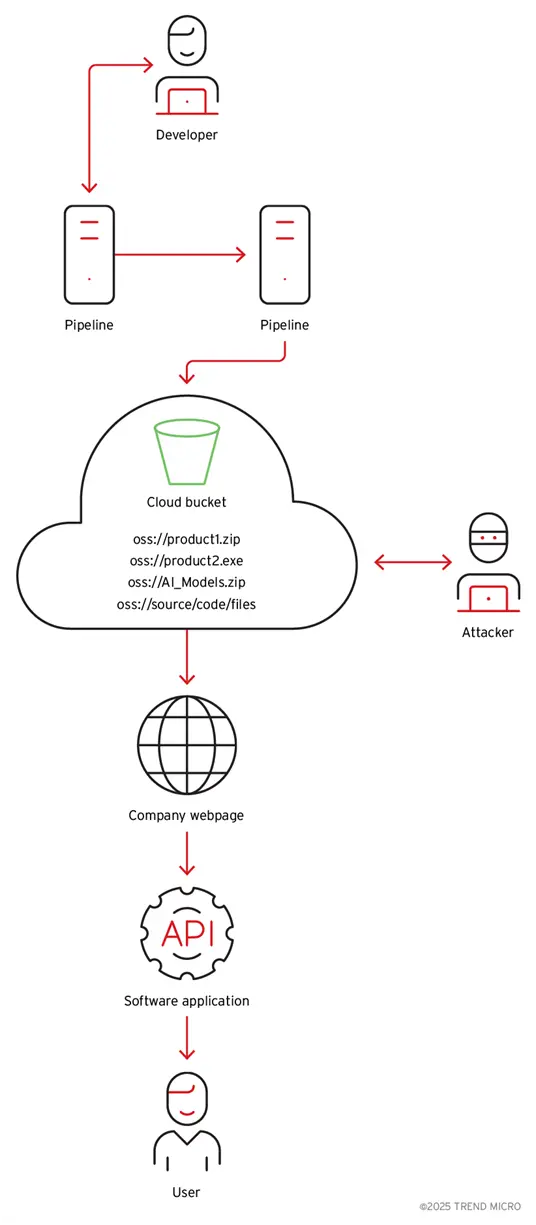

Figure 5. Diagram of how the binary with cloud access is distributed to the user

Private data exposure: The first critical issue

We discovered that the unsecured storage service held customer-uploaded data dating back two years before this study, which raises serious privacy and regulatory concerns, especially under the European Union's General Data Protection Regulation (GDPR), the United States' Health Insurance Portability and Accountability Act (HIPAA), or similar frameworks. The exposed data includes thousands of unencrypted, sensitive personal photos that customers had uploaded for AI enhancement (Figure 6).

Figure 6. The Wondershare RepairIt home page showcasing its tools

In addition to the immediate risks of regulatory penalties, reputational damage, and the loss of competitive advantage through intellectual property theft, the exposure of this data also gives threat actors the opportunity to launch targeted attacks against the company and its customers.

Supply chain problem: Manipulating AI models

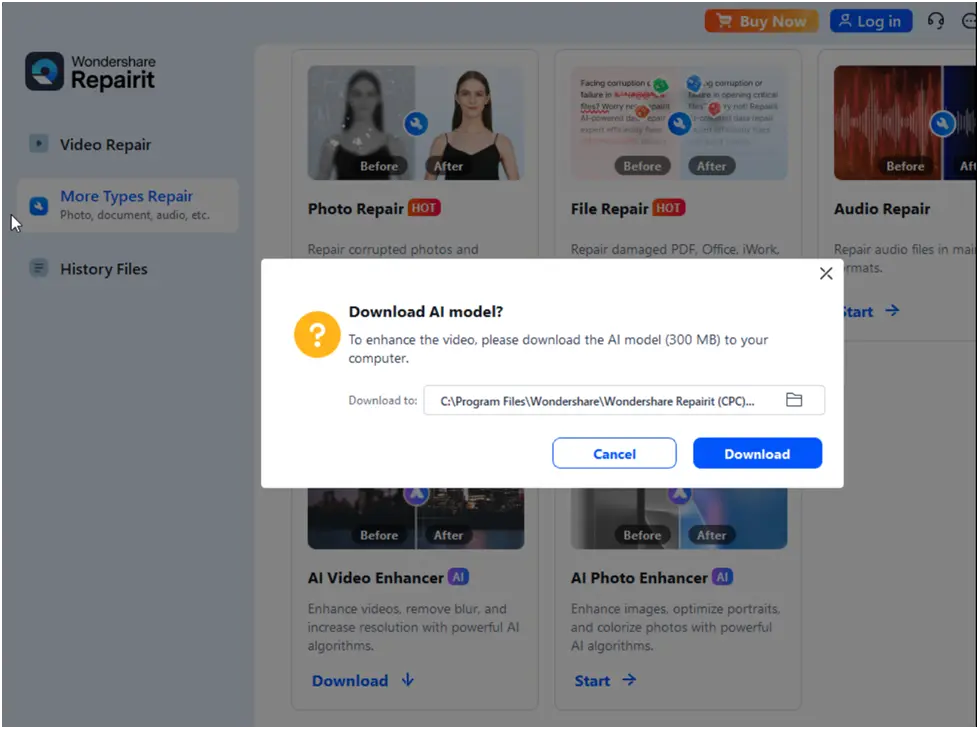

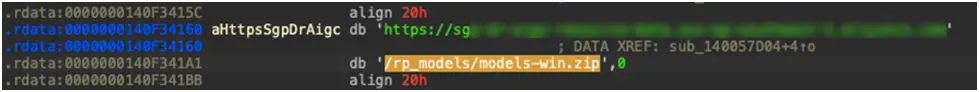

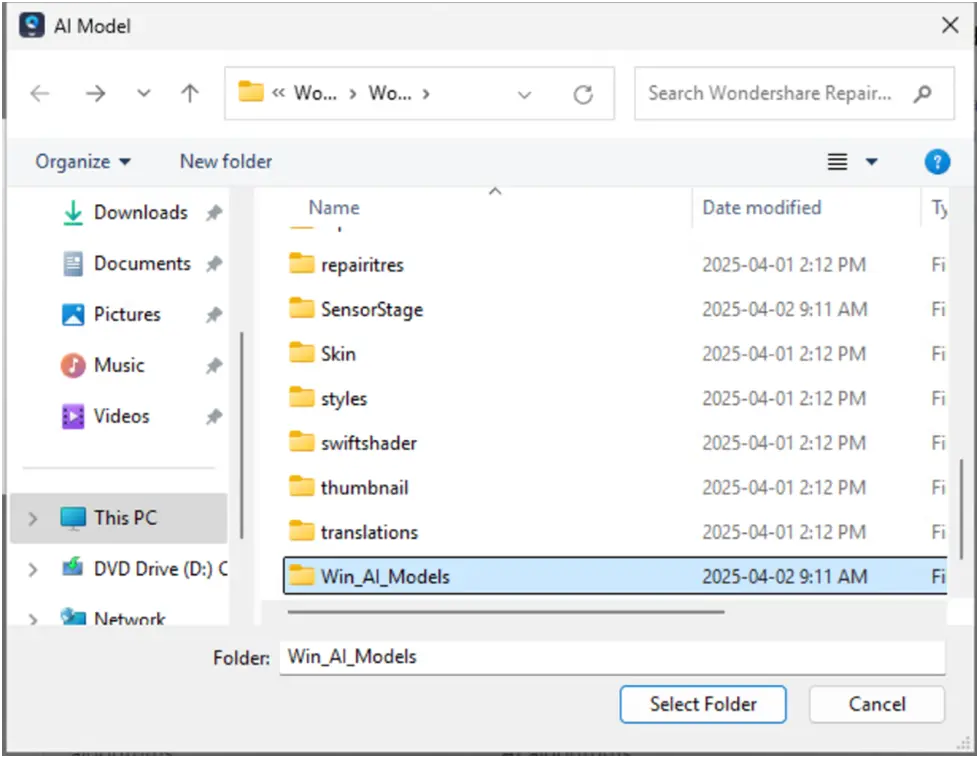

When users interact with Wondershare RepairIt, a pop-up window asks them to download AI models directly from the cloud storage bucket in order to enable local services (Figure 7). The exact bucket address and the ZIP file name of the AI model to download are specified in the program (Figure 8).

The risk of a sophisticated AI supply chain attack may be even more worrying than the exposure of customer data. As in other cases we have discussed previously, attackers could modify these models or their configurations and infect users without their knowledge, because the binary automatically retrieves AI models from the unsecured cloud storage and runs them (Figure 9).

This opens up several attack scenarios, in which malicious actors could:

- Replace legitimate AI models or configuration files in the cloud storage.

- Modify software executables and target the company's customers with supply chain attacks.

- Compromise models to run arbitrary code, establish persistent backdoors, or steal additional sensitive customer data.

Figure 7. The Wondershare RepairIt pop-up

Figure 8. The AI model ZIP file name to be downloaded from the bucket by the binary

Figure 9. The attack flow in which the attacker finds the bucket credentials and replaces the contents with a malicious file
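One common way to reduce the model-replacement risk described in this section is for the client to verify a downloaded archive against a pinned digest before loading it. The following is a minimal sketch of that idea using only the Python standard library; it is not the vendor's implementation, and the function names and the notion of an `expected_sha256` value shipped through a separate trusted channel are assumptions made for illustration.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model archives are not read at once."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_archive(path: Path, expected_sha256: str) -> bool:
    """Return True only if the archive matches the pinned SHA-256 digest.

    expected_sha256 is assumed to come from a source the attacker cannot
    write to, not from the same bucket as the archive itself.
    """
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.lower())
```

The check only helps if the pinned digest is out of the attacker's reach. In the scenario described here, the same credentials also exposed the signed binaries, so a digest stored next to the model in the bucket would offer no protection.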

Real-world impact and severity

It is impossible to overstate the severity of such a scenario, since a supply chain attack of this kind could affect a large number of users worldwide by using binaries signed by a legitimate vendor to deliver malicious payloads.

Historical precedent and lessons

Events such as the ASUS ShadowHammer attack and the SolarWinds Orion attack highlight the catastrophic potential of compromised binaries delivered through legitimate supply chain channels. The Wondershare RepairIt case follows the same pattern. The risks are the same, but they are amplified by the widespread use of locally executed AI models (Figures 10 and 11).

Figure 10. AI models downloaded from the bucket and saved locally

Broader impacts: Beyond data breaches and AI attacks

In addition to the direct exposure of customer data and the manipulation of AI models, several other significant concerns emerge:

- Intellectual property theft. The company's market position and finances could be seriously damaged if competitors were able to reverse engineer its advanced algorithms using the proprietary models and source code.

- Legal and regulatory impact. Exposure of customer data can lead to heavy fines, legal action, and mandatory disclosure under GDPR and other privacy regulations, seriously undermining trust and financial stability. A prominent example of these risks is TikTok, which was fined 530 million euros by the European Union in May for violating data privacy laws.

- Loss of customer trust. Customer trust takes a long time to build. Although it is hard to earn, it is easily lost, which can have a long-term economic impact and lead to large-scale customer churn.

- Insurance consequences and vendor liability. Breaches of this kind have operational and financial repercussions beyond direct fines. Insurance claims, lost vendor agreements, and the follow-on effects on other vendor relationships significantly increase the financial damage.

The bottom line

The drive for continuous innovation pushes companies to bring new features to market quickly and stay competitive, but they may not anticipate new, undiscovered uses of those features or how their functionality may evolve over time. This is why the security implications matter. It is therefore essential to build a strong security process across the CI/CD pipeline and the entire organization.

Beyond maintaining consumer trust in AI-powered solutions, transparency about how data is used and processed is necessary for regulatory compliance. Companies must ensure that their actual operations match their published privacy statements in order to close the gap between policy and practice. To keep up with the threats associated with developing and deploying AI, security protocols must be continuously reviewed and improved. The only way for organizations to protect both their proprietary technologies and consumer trust is through rigorous security standards.

About the author:

Yogesh Naager is a content specialist in cybersecurity and the B2B space. In addition to writing for the News4Hackers blog, he also writes for brands including Craw Security, Bytecode Security, and NASSCOM.

